The Last School is an experimental documentary that explores the complexity of the Hong Kong exodus through themes of identity and memory. Inspired by Goldfish Street in Hong Kong, where fish suspended in plastic bags await an uncertain fate, the work is presented in a 360-degree projection space consisting of three curved screens and a floor projection, creating a dynamic, enveloping environment that mirrors the fluid, often disorienting experience of migration.

By using immersive visuals and sound, the work aims to transform documentary elements into a contemplative experience. It invites the audience into a poetic and open-ended space where personal and collective memories can be reflected upon. It asks how we carry identity across distances and how we hold on to belonging when home no longer feels like home.

The Last School was showcased in the Enhanced Immersion Studio at the ASU Media and Immersive eXperience (MIX) Center on April 11, 2025.

Tech Stack

Content Creation: Adobe Premiere Pro, After Effects, Illustrator, Audition, TouchDesigner, Meshy AI

System Integration: Pixera, Spacemap Go, QLab

Projection Mapping

The installation used five Epson EB-PU2216B projectors: one projector for each of the three curved screens and two projectors for the floor. Each projector was equipped with an Epson ELP-LU04 lens, which allowed for a bright, large image to be projected from a relatively short distance.

Each of the curved screens in the installation was constructed by joining two smaller curved frames, with fabric stretched tightly over them. While working on the projection mapping, I realized that this setup introduced subtle irregularities: the curves were not perfectly smooth, and the seam between the two halves of each screen created visual tension during projection. This made it difficult to work with precise visual elements such as text, subtitles, or animations with strong lines.

The default projection mapping tools, particularly the FFD Modifier, allowed basic warping with segment divisions (I used 4x4x1), but that was not sufficient to align the visuals cleanly along the seams. FFD worked well for general shaping but was not granular enough to make the connecting edges feel visually coherent: pixels near the seams tended to converge or bend unnaturally, which was especially noticeable in horizontal or vertical lines. That’s where the Vertex Modifier became essential. With the Vertex tool, I could manipulate individual control points on the mesh, making micro-adjustments to stretch or compress certain areas so that lines appeared as straight and uninterrupted as possible.
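To illustrate why a coarse FFD lattice struggles with a localized seam, here is a minimal sketch of a textbook free-form deformation using Bernstein (Bezier) weights. It is not Pixera's implementation, which I have not inspected. The 4x4 control lattice stands in for the 4x4x1 setting, and whether that setting means four control points or four segments per axis is an assumption. The function names are hypothetical.

```python
from math import comb


def bernstein(i, n, t):
    """Bernstein basis polynomial B_i^n(t), the weight of control point i."""
    return comb(n, i) * (t ** i) * ((1 - t) ** (n - i))


def identity_lattice(n=4):
    """An n x n lattice of evenly spaced control points that leaves the image unwarped."""
    return [[(i / (n - 1), j / (n - 1)) for i in range(n)] for j in range(n)]


def nudge(lattice, row, col, dx, dy):
    """Return a copy of the lattice with one control point moved by (dx, dy)."""
    moved = [list(r) for r in lattice]
    x, y = moved[row][col]
    moved[row][col] = (x + dx, y + dy)
    return moved


def ffd_warp(u, v, lattice):
    """Map normalized screen coordinates (u, v) in [0, 1] through the lattice."""
    rows, cols = len(lattice), len(lattice[0])
    x = y = 0.0
    for j in range(rows):
        bv = bernstein(j, rows - 1, v)
        for i in range(cols):
            w = bernstein(i, cols - 1, u) * bv
            cx, cy = lattice[j][i]
            x += w * cx
            y += w * cy
    return x, y


if __name__ == "__main__":
    warped = nudge(identity_lattice(4), 1, 1, 0.0, 0.05)
    # Every sample point moves, not just those next to the nudged control point.
    for u, v in [(0.33, 0.33), (0.5, 0.5), (0.9, 0.9)]:
        _, y = ffd_warp(u, v, warped)
        print(f"({u}, {v}) vertical shift: {y - v:.6f}")
```

In this model every control point influences the whole surface with a smoothly decaying weight, so a correction aimed at the seam also bends content elsewhere. A per-vertex edit moves only the chosen mesh point, which is the kind of local control the seams needed.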

For the floor projection, I used two projectors to cover the larger area, so I had to blur the overlapping region in the middle of the space. One of the projectors was slightly tilted, so I also applied a small amount of warping to align the images from the two sides.
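The blur across the overlap can be thought of as a soft-edge blend: each projector fades out across the shared band so that the combined light stays roughly constant. The sketch below is a generic illustration, not the media server's own blending code. The smoothstep ramp, the simple power-law gamma of 2.2, and the overlap positions are all assumptions chosen for the example.

```python
def smoothstep(t):
    """Ease-in/ease-out ramp from 0 to 1 for t in [0, 1]."""
    return t * t * (3 - 2 * t)


def blend_weights(x, overlap_start, overlap_end, gamma=2.2):
    """Return (left, right) multipliers for a pixel at normalized position x.

    The ramp is defined in linear light so the two contributions sum to 1,
    then converted to gamma-encoded multipliers for the video signal.
    """
    if x <= overlap_start:
        left_linear = 1.0
    elif x >= overlap_end:
        left_linear = 0.0
    else:
        t = (x - overlap_start) / (overlap_end - overlap_start)
        left_linear = 1.0 - smoothstep(t)
    right_linear = 1.0 - left_linear
    return left_linear ** (1 / gamma), right_linear ** (1 / gamma)


if __name__ == "__main__":
    # Hypothetical overlap covering the middle 20% of the floor width.
    for x in (0.40, 0.45, 0.50, 0.55, 0.60):
        left, right = blend_weights(x, 0.4, 0.6)
        print(f"x={x:.2f} left={left:.3f} right={right:.3f}")
```

Blending in linear light rather than directly on the encoded values is what keeps the middle of the overlap from looking darker or brighter than the rest of the floor.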

Media Server Programming

Pixera was used as the media server, with OSC commands from QLab triggering cue playback. Each screen had its own layer in the timeline, onto which I imported media rendered in the HAP codec. Because synchronization between the audio and all the screens was critical, every media file was rendered at full length with its transitions included. All I then had to do was place the files at the same starting point, at a Play Cue that QLab could trigger with an OSC command. At the same time, the arrangements made in Spacemap Go were also triggered.
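For readers unfamiliar with OSC, the sketch below shows what such a trigger looks like on the wire, following the OSC 1.0 message layout (padded address, padded type tags, big-endian arguments). In the show, QLab sent the messages; Python is used here only for illustration. The address `/example/cue/play`, the host, and the port are placeholders, not the addresses Pixera actually listens on.

```python
import socket
import struct


def osc_string(text):
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address, *args):
    """Build a single OSC message with int, float, or string arguments."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            raise TypeError("bool arguments are not handled in this sketch")
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)  # 32-bit big-endian integer
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)  # 32-bit big-endian float
        elif isinstance(arg, str):
            tags += "s"
            payload += osc_string(arg)
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return osc_string(address) + osc_string(tags) + payload


def send_osc(host, port, address, *args):
    """Send one OSC message over UDP."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message(address, *args), (host, port))


# Example call (placeholder address, host, and port):
# send_osc("192.168.1.10", 8000, "/example/cue/play", 1)
```

Because the packet is a single UDP datagram with no acknowledgement, rendering each file at full length and triggering it once keeps the number of messages that must arrive on time to a minimum.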

One of the challenges I faced was unstable playback performance. I encountered persistent flickering, especially when triggering playback via QLab, even though all files had been exported in the HAP codec to optimize real-time performance.

A temporary workaround was to move the mouse in a circular motion (with the middle mouse button pressed) over the Pixera preview window, which appeared to suppress the flickering. However, this is not practical for a long-term installation.

At the time of documentation, the exact cause of the flickering had not been identified. Several potential contributing factors were considered:

- Pixera's frame blender is active by default, which may cause conflicts when footage created at 25 frames per second is played back on a system running at 30 frames per second, leading to frame interpolation issues. However, when I tried playing back 30 fps footage, it made no obvious difference.

- Pixera might run an extended transcoding process when media files are first imported, as the flickering seemed to decrease over time. However, I was told that transcoding only produces preview files and that the final outputs should play back the native file.
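The frame-rate hypothesis in the first bullet can be made concrete with a little arithmetic. The sketch below computes which source frame each output frame lands on when 25 fps footage is shown at 30 fps. It models a generic player, and how Pixera's frame blender actually behaves is an assumption here. Since 30 fps footage flickered as well, this illustrates the hypothesis rather than confirming the cause.

```python
from fractions import Fraction


def source_positions(src_fps, out_fps, n_out):
    """Exact source-frame position (possibly fractional) for each output frame."""
    return [Fraction(k * src_fps, out_fps) for k in range(n_out)]


def repeated_frames(src_fps, out_fps, n_out):
    """Without blending: count output frames that repeat the previous source frame."""
    shown = [int(p) for p in source_positions(src_fps, out_fps, n_out)]
    return sum(1 for a, b in zip(shown, shown[1:]) if a == b)


def blended_frames(src_fps, out_fps, n_out):
    """With blending: count output frames that fall between two source frames."""
    return sum(1 for p in source_positions(src_fps, out_fps, n_out) if p.denominator != 1)


if __name__ == "__main__":
    for src in (25, 30):
        print(
            f"{src} fps on a 30 fps output, over one second:",
            f"{repeated_frames(src, 30, 30)} repeated frames without blending,",
            f"{blended_frames(src, 30, 30)} mixed frames with blending",
        )
```

In this model, 25 fps material on a 30 fps output either repeats one frame in every six or requires most output frames to be a mix of two source frames, while 30 fps material needs neither.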

Given these uncertainties, further technical investigation would be necessary to fully resolve the issue.

Migration to VR Environment

Following the physical exhibition, the next phase of the project will involve migrating the installation into a Virtual Reality (VR) environment. The VR adaptation will replicate the setup of the original installation. VR offers the potential to preserve the experience of being enclosed within shifting emotional and political landscapes, even outside of a large physical installation space. It also makes the experience more accessible: individuals from different regions will have the opportunity to experience the work firsthand.

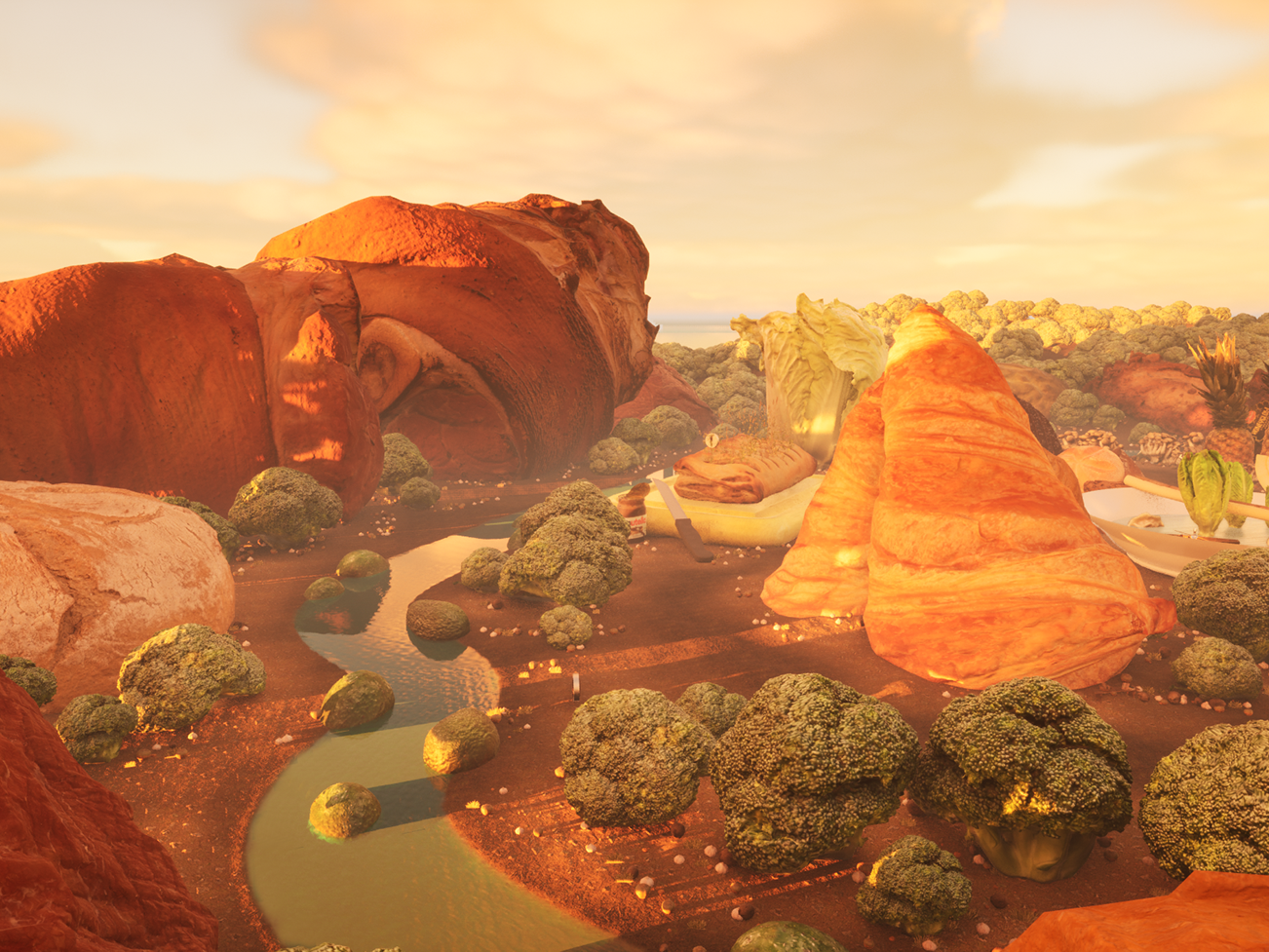

Currently, I have modeled a virtual environment in Unreal Engine, mapping the video sequences onto screens within the virtual space to recreate the three-screen setup of the physical installation.

The next step is to implement a Blueprint system that allows all the sequences to synchronize and play together, replicating the timing and spatial experience of the original show. In the virtual environment, the viewer will be positioned at the center of the three screens, similar to the way audiences were placed in the physical installation. Spatial audio will need to be rebuilt to match the VR environment, with the possibility of adding head tracking to create a more immersive and responsive soundscape.
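One possible shape for that synchronization logic is a shared master clock that every screen's player is checked against. The sketch below is language-agnostic pseudologic written in Python, not Unreal Engine code. The `Player` class, the screen names, and the one-frame tolerance are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Player:
    """Stand-in for one screen's video player (hypothetical)."""
    name: str
    position: float  # current playback position in seconds


def resync(players, master_time, tolerance=1 / 60):
    """Snap any player that has drifted past the tolerance back to the master clock.

    Returns the names of the players that were corrected.
    """
    corrected = []
    for player in players:
        if abs(player.position - master_time) > tolerance:
            player.position = master_time
            corrected.append(player.name)
    return corrected


if __name__ == "__main__":
    screens = [
        Player("left", 10.000),
        Player("center", 10.005),
        Player("right", 10.200),
    ]
    print(resync(screens, master_time=10.0))
```

Only correcting players that exceed the tolerance avoids constant seeking, which could itself cause visible stutter.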

I am also planning to explore RealityKit for an adaptation to the Apple Vision Pro.